Deep Dive

Artificial Intelligence: The Acceleration of Risk and Legal Complexity & What To Do About It

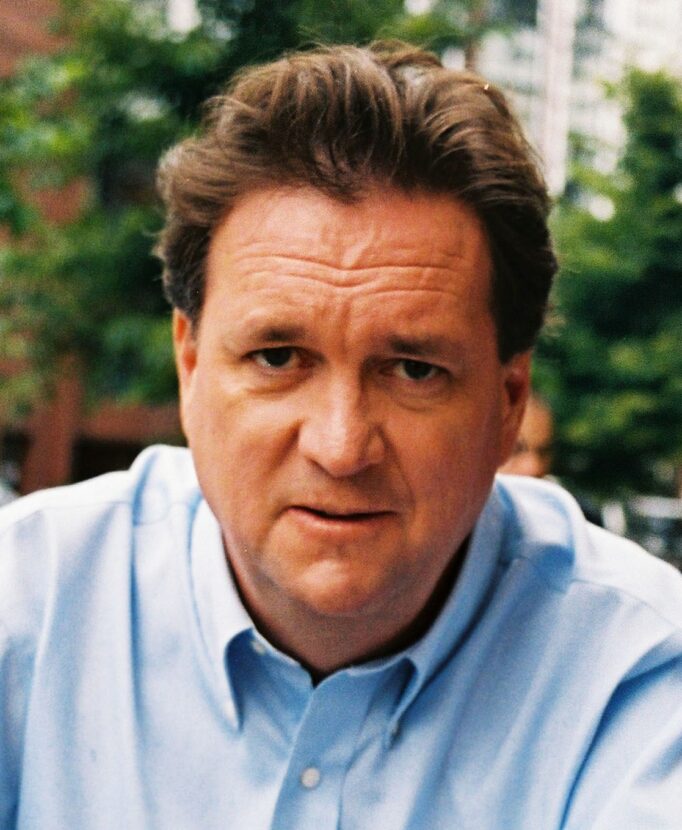

Global futurist Jim Carroll shares insights into the acceleration of risk and legal complexity due to artificial intelligence, and what organizations can do to mitigate their risk.

What are we going to do the first time someone wants to have an AI chatbot offer evidence in a court trial? Is the evidence reliable? Will it be trustworthy, or might it include some invalid or plainly incorrect misinformation? Will lawyers on both the plaintiff and defendant side be prepared to deal with these thorny, complex new legal issues? This is not a mythical issue – indeed, we will probably see this challenge arrive before we know it.

What are association executives going to do as ChatGPT and other technologies begin to chip away at the knowledge base within their profession? What will they do to deal with the absolute explosion of new knowledge that is already emerging – and how will they prepare their members for that? What do they need to do with professional education so that careers don’t disappear – but evolve at the speed of AI? Not only that – do they have a concise view of the new opportunities and challenges all this fast moving AI technology is presenting to their industry?

What will corporate risk managers and legal staff do to deal with an absolute flood of new legal risk issues, involving complex new legal issues that did not previously exist? How will they manage fast emerging trademark, copyright, intellectual property and other new issues? What will they do as artificially-generated ‘misinformation-at-scale’ floods their world posing complex new defamation, libel and other challenges?

What will regulators and politicians do as AI comes to challenge the very foundation of so many existing laws and regulations, at the same time that it poses vast new economic, geopolitical and societal challenges and opportunities? How do we move forward an already slow moving government process to deal with blazing fast AI technologies?

These are not theoretical questions – these are new realities that we have to begin thinking about right now, at this very moment – because the future of AI is happening faster than we think.

Most of us already know that – the last few months have been a whirlwind with new AI technologies.

The big question is – what do we do about it?

The Unique Legal and Risk Challenge of Technology

I’ve had some unique experiences with questions like this.

Over 20 years ago, I was very heavily involved as an expert witness in a Canadian federal court case that involved among other things, intellectual property, trademark, Internet broadcasting, the origins of the Internet, and most importantly, the evidentiary value of the Internet in the courtroom. (This was one of 5 cases I was involved with; two others involved my insight as an expert witness to two successful Leaves to Appeal to the Supreme Court of Canada.)

Held in Canadian Federal Court – Trial Division, the case between ITV TECHNOLOGIES, INC. and the plaintiff WIC TELEVISION LTD. consumed pretty much one year of my life, in addition to the keynotes I was involved in at the time.

I was there because, up to that time, I had already written 34 books about the Internet and technology trends throughout the 90s, which launched me into my global futurist role. My role was to provide detailed insight into the origins of the Internet, and the complex issues it presented in this very complex legal case.

I was there to testify about issues of trademark, copyright, the origin of domain names, Internet broadcasting, and generally, how the Internet works.

But a key part of my testimony was unique for the time, and had to do with whether the Internet could be used in the courtroom as actual evidence – specifically to the question of “the evidentiary value of the Internet in court.” In essence, the issue was whether the information on a webpage was accurate or false, and whether it could be relied upon in the same way, and meet the standards of other evidence. This was a unique moment in the world of law, and still remains somewhat unresolved to this day.

(As an aside, I actually had 3 days of testimony in addition to all the affidavits I prepared. And I will say this – I have absolutely no issues getting up in front of 10,000 people on the massive stage in Las Vegas with a keynote about the future, but I am willing to admit that being in a court room, testifying, in front of a judge, three lawyers, and two spectators is one of the most difficult things I’ve ever had to do! I doubt that I will ever want to do it again!)

The issues that I covered on the stand and in the affidavit that I provided gave me a very early glimpse into the very complex issues that emerge into a new world — what happens when fast moving technology runs up against a slow legal process. It provided me insight into the fact that by and large, even twenty years ago, society, corporations, associations, and government seemed to be ill prepared to deal with the speed with which new challenges were emerging.

Keep in mind this all happened 21 years ago. Now, in the context of the emergence of ChatGPT and other technologies, we must consider that if we were ill-prepared then, we are even more challenged today.

Looking back, the testimony I offered with respect to the issues at hand now seems very quaint in light of the massive, emerging legal issues surrounding artificial intelligence. It’s pretty evident that at some point soon, someone will attempt to introduce an artificial intelligence chatbot, or information generated from an AI, as evidence.

How will courts react?

This is but one of the massively complex legal issues that are unfolding in a world that seems ill prepared to cope with the speed at which this new technology is coming at us.

And that’s what we need to focus on.

The Emergence of New, Unknown Risk

Last fall, I spoke in Switzerland at the global risk management conference for Zurich Insurance. I was there, as a futurist, to provide insight into the new emerging risks that corporate organizations and associations should be thinking about. The room was full of corporate risk managers.

In addition to a long list of emerging technological, societal and other risks, I also offered two key observations:

“EVERY NEW TECHNOLOGY IS ULTIMATELY USED FOR A NEFARIOUS TERRIBLE PURPOSE, ACCELERATING SOCIETAL RISK”

AND

“NEW TECHNOLOGIES WILL NOW ALWAYS EMERGE FASTER THAN THE ABILITY OF ORGANIZATIONS TO MANAGE THE RISK IT REPRESENTS!”

My key point for this room full of corporate risk managers was that we are in a situation in which new unknown risks are emerging with new technologies and complex issues at such a speed that we don’t even yet know what they are – and don’t have any idea as to how to manage them.

In effect, it’s not just the identified risks that are coming that we need to worry about – it’s the new unknown risks. Not just that, but it’s the speed at which these new unknown risks are coming at us.

It was barely one month later that ChatGPT appeared on the scene, and our world of legal issues and risk management forever changed. And it is at this very moment that government leaders, business executives, association executives, and just about everyone else have started to think about these risks, and what to do about them.

There are no easy answers – and precious little time to sort it out.

The Acceleration of All Things Legal

All of us need to understand the depth of these new issues and what they present in terms of new challenges and opportunities, peril and risk.

And to start out, we should appreciate that these AI risks and legal issues have already been with us for a time. What better way to assess that than to ask an AI itself – Microsoft’s Bing, which is based on GPT-4?

Question:

“Provide me a concise summary, with details, of the various new legal and risk issues that have emerged with AI technology prior to the arrival of ChatGPT, and what organizations have had to do to try to deal with these issues.”

Bing ChatGPT:

“AI technology has raised various legal and risk issues that have challenged the existing laws and regulations. Some of these issues are:

Algorithmic transparency: AI systems often operate as black boxes, making it difficult to understand how they reach certain decisions or outcomes. This can affect the rights and interests of individuals and organizations who are subject to AI decisions, such as in credit scoring, hiring, or medical diagnosis. Algorithmic transparency requires AI systems to be explainable, interpretable, and accountable for their actions and results.

Cybersecurity vulnerabilities: AI systems can be susceptible to cyberattacks, such as data breaches, hacking, or manipulation. This can compromise the security, privacy, and integrity of the data and systems involved, and potentially cause harm to individuals or organizations. Cybersecurity vulnerabilities require AI systems to be robust, resilient, and secure against malicious threats.

Unfairness, bias, and discrimination: AI systems can reflect or amplify the biases and prejudices of their developers, users, or data sources. This can result in unfair or discriminatory outcomes for certain groups or individuals, such as in facial recognition, criminal justice, or social media. Unfairness, bias, and discrimination require AI systems to be fair, inclusive, and respectful of human dignity and diversity.

Lack of contestability: AI systems can make decisions or recommendations that affect the rights and interests of individuals or organizations, such as in insurance claims, tax audits, or health care. However, there may not be adequate mechanisms or processes to challenge or appeal such decisions or recommendations, especially if they are based on complex or opaque algorithms. Lack of contestability requires AI systems to be subject to human oversight and intervention, and to provide effective remedies for grievances.

Legal personhood issues: AI systems can act autonomously and independently of human control or supervision. This can raise questions about the legal status and responsibility of AI systems and their creators or users. For example, who owns the intellectual property rights of an AI-generated work? Who is liable for the damages caused by an AI-driven car? Who is accountable for the ethical implications of an AI-enabled weapon? Legal personhood issues require clear and consistent definitions and frameworks for the legal rights and obligations of AI systems and their stakeholders.”

Woah. That’s some complex stuff! And at this point I asked Bing to stop because it kept on going with a long list!

Proceeding further, I asked where this goes next. After all, what’s a good futurist these days without a good futurist-AI friend?

Question:

“Now, with the arrival of ChatGPT and LLM (Large language models) what new risks are introduced?”

BingChatGPT4 responded:

“Large language models (LLMs) such as ChatGPT and LLM are AI systems that can generate natural language texts based on large amounts of data. They have many applications and benefits, but they also introduce new risks that need to be addressed. Some of these risks are:

Biases and ethical concerns: LLMs can reinforce biases present in their training data. This could lead to ethical issues such as discriminatory or misleading content that tarnish a business’ reputation or harm individuals or groups. Biases and ethical concerns require LLMs to be trained and evaluated on diverse and representative data, and to be aligned with human values and norms.

Information hazards: LLMs can leak private or sensitive information from their training data or generate plausible but false information that could harm individuals or organizations. Information hazards require LLMs to be designed and deployed with data protection and security measures, and to be transparent and accountable for their sources and outputs.

Misinformation harms: LLMs can produce poor, false, or misleading information that could affect the quality and reliability of knowledge and information. This could have negative consequences for individuals or organizations who rely on LLMs for decision making, especially in sensitive domains such as health, education, or finance. Misinformation harms require LLMs to be accurate, consistent, and verifiable, and to provide sources and evidence for their claims.

Malicious uses: LLMs can be used by actors who try to cause harm or exploit others by generating deceptive, manipulative, or harmful content. This could include spam, phishing, propaganda, fake reviews, impersonation, or cyberbullying. Malicious uses require LLMs to be regulated and monitored for potential abuse, and to provide mechanisms for detection and prevention of harmful content.”

Double yikes! This is complex stuff. Are we ready? I had to stop Bing’s AI because it kept on going!

Accelerating Issues

All of this is now happening in a world in which we were already dealing with a massive explosion in the base of legal knowledge and risk management issues. I’ve spoken at the partners conferences of many major legal firms, as well as a variety of risk and safety oriented conferences. In all of these events, I’ve stressed several things when it comes to the future of risk, including legal risk:

- There’s going to be more of it

- It’s going to come at us with greater speed and intensity

- Unless we are watching really carefully, we won’t be aware of it until we have to deal with it

- The implications of not dealing with new risks will become more severe as time moves on

- It’s not the known risk that will get us – it’s the unknown risk that matters

- There is more new unknown risk coming at us

- It’s coming at us even faster than we know

- And in that context, that means there are a lot of new risks that are yet to come that we don’t yet understand and that we don’t yet know how to manage

Leaders and organizations must manage the rapid emergence of new risks and escalating risk realities! But we aren’t very good at that – looking at one of my slide decks, I’ve also emphasized these points:

- Every new technology involves new risk

- We don’t fully understand the new risk that comes with this technology.

- We tend to roll out new technologies before we think through the implications of what risk it represents.

- People then discover this new risk, and take advantage of it, before we are prepared to deal with it

- We tend to get more excited about the opportunity of new technology than we do about the process of managing the risk it represents!

- We don’t really get any money allocated for the management of the risk with new technology at the same time that we spend money for that new technology

How do you mitigate against risk when you don’t know what that risk might be? How do you guard against legal issues that don’t even yet exist? How do you guard intellectual property for products you don’t even yet know you will invent? That’s the challenging reality of our new world of risk today.

Want some fun? At one of these events, I put up a list of 40 new areas of legal risk management that did not exist 10 years ago. Protecting shared 3D printing models of your new products that could be easily counterfeited. Drone technology and surveillance law. Genetic counselling laws, LGBTQ issues, fantasy sports league management, organic certification, and laws involving cannabis use in the workplace and place of business.

Now add AI law into the mix.

Moving Forward? Government, Associations, Corporations and Professions

So what do we need to do about all this?

Obviously, we need to figure it out – and that involves getting on top of the trends, understanding them and assessing what they mean. Knowledge and insight are paramount.

And that needs to be done with the reality in mind that it’s all moving faster than fast.

As I write this blog post, things are moving incredibly fast. We are already seeing all kinds of new legal actions within the creative, trademark, copyright, and other industries. Federal government agencies are rapidly trying to come to grips with what to do about what is happening. Laws and regulations, even when adapted to the reality of global connectivity and the Internet, do not yet seem structured to deal with the complex new challenges of our artificial intelligence age.

Legislators need to get on side, and this seems to be a challenge, given the polarized nature of global politics. I was extremely encouraged when I listened to “The Daily” podcast from the New York Times, where they interviewed one US senator, a 73-year-old man, who has taken the time to take a couple of artificial intelligence courses on his way to an AI degree. He is doing so in order to understand the nature of what is happening, and the legislative action that might be required. That’s a good sign, because the very same newspaper recently ran an article that acknowledged that Congress was ill-equipped to deal with the speed at which events are unfolding.

Association executives, CEOs and CxOs, and corporate risk and legal managers need to understand and constantly assess and reassess the nature of the risk that AI is now presenting in their industry, and the new risk issues they will be directly challenged with. They need to think about the skills and knowledge issues that are arriving in this complex, new world, and what they need to do to deal with them. They must assess the potential disruptive impact of AI, and the strategic pathways forward.

The best way to start is to do a very detailed industry and company specific overview of the impact of this new fast world. This should be done by keeping in mind that this is not just about ChatGPT, but so much more. I’ve been busy preparing for my clients an industry-by-industry assessment of a vast number of issues that AI presents – both in terms of what has happened already, as well as what is yet to come. This needs to be done on a continuous basis, because things are evolving so fast.

Similarly, association executives and leaders need to ensure that, with AI being the hottest topic on the speaking circuit in 2023, they aren’t just focused on ChatGPT but also all the other elements of AI. ChatGPT is getting all the attention, and will have profound implications, but there is so much more that has already been happening, and so much more yet to come. They need to ensure that their members, and the industry they professionally represent, have good detailed insight as to where they should go, how this will impact them, and what they need to do about it. I suspect this will be a Main Stage and leadership meeting topic for many years to come.

In my 30 years as a futurist I have never seen a technology and trend explode at the speed with which AI is unfolding. I am excited for the opportunity it presents, and yet at the same time, I am terrified by what it represents, particularly for organizations and associations that are ill prepared to manage what comes next.

Over in Switzerland in the Fall I closed my keynote with two comments.

First:

“Companies that do not yet exist will build products that are not yet conceived, based on ideas that have not yet been generated, using materials not yet invented, with manufacturing methods that have not yet been conceived. Are you ready for the new world of disruption?”

And then:

“Risks that don’t yet exist will come from products, inventions and ideas that are not yet conceived, based on imaginative concepts not yet imagined…. Are you ready for the new world of risk?”

Welcome to your future!
